Nvidia just released a $1,200 graphics card, and the first one went to him

On July 21, 2016 (US time), Nvidia released its latest graphics card, the Nvidia TITAN X, priced at US$1,200 (more than 8,000 yuan), and gave the very first card to Andrew Ng (Wu Enda).

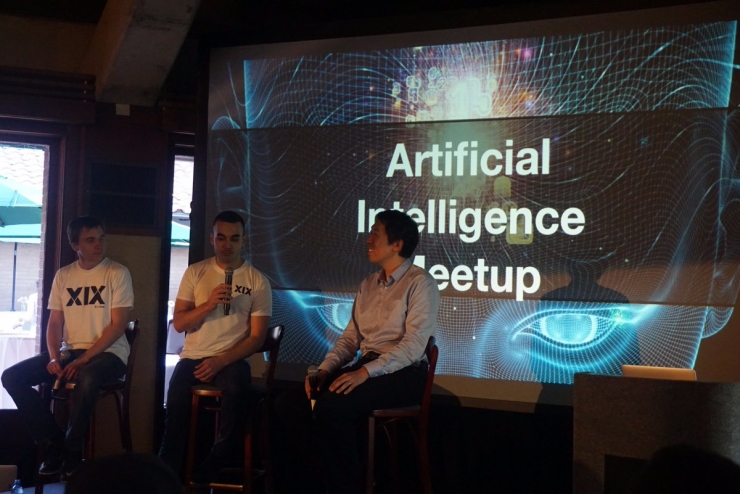

At 6:00 PM on July 21, 2016 (US time), Nvidia held a small artificial intelligence meetup at the Stanford Faculty Club together with Baidu's US research institute. Andrew Ng, chief scientist at Baidu's US research institute and one of the founders of modern artificial intelligence research, was invited, as were Bryan Catanzaro and Eric Battenberg of Baidu's Silicon Valley lab.

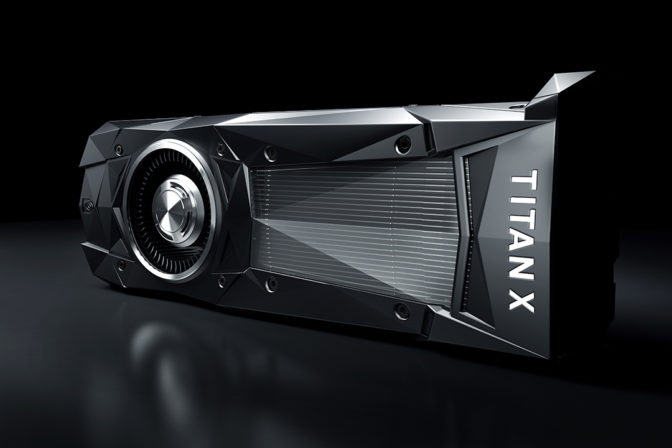

The new Nvidia TITAN X was unveiled at the meetup. Its specifications are as follows:

11 TFLOPS FP32

44 TOPS INT8 (New Deep Learning Architecture)

12 billion transistors

3,584 CUDA cores at 1.53 GHz (versus 3,072 cores at 1.08 GHz in the previous TITAN X)

60% faster than the previous TITAN X

Engineered for high performance at maximum overclock

12 GB of GDDR5X memory (480 GB/s)
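The headline figures above can be cross-checked with simple arithmetic. The sketch below assumes each CUDA core completes one fused multiply-add (counted as 2 floating-point operations) per clock cycle, and that INT8 runs at four times the FP32 rate; both are assumptions for illustration, not figures stated in this article. The function name `peak_fp32_tflops` is hypothetical.

```python
def peak_fp32_tflops(cuda_cores, clock_ghz, flops_per_core_per_clock=2):
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumption: one fused multiply-add (2 FLOPs) per core per clock.
    """
    gflops = cuda_cores * clock_ghz * flops_per_core_per_clock
    return gflops / 1000.0


# Core counts and clock speeds taken from the spec list above.
new_titan_x = peak_fp32_tflops(3584, 1.53)
old_titan_x = peak_fp32_tflops(3072, 1.08)

# Ratio of theoretical peaks between the two cards.
speedup = new_titan_x / old_titan_x

# Assumption: INT8 throughput is 4x the FP32 rate.
int8_tops = 4 * new_titan_x

print(f"New TITAN X peak FP32: {new_titan_x:.2f} TFLOPS")
print(f"Old TITAN X peak FP32: {old_titan_x:.2f} TFLOPS")
print(f"Theoretical speedup:   {speedup:.2f}x")
print(f"Estimated INT8 rate:   {int8_tops:.1f} TOPS")
```

Under these assumptions the new card lands close to the quoted 11 TFLOPS and 44 TOPS, and the ratio of peak rates is in the same range as the "60% faster" claim.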

At the event, Nvidia said:

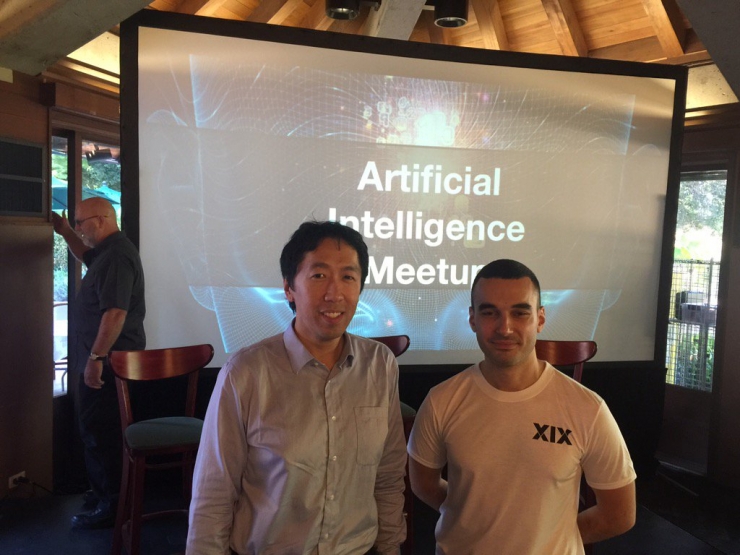

Everyone has heroes. Andrew Ng, a pioneer of deep learning and currently chief scientist at Baidu's US research institute, is one of ours. Nvidia CEO Jen-Hsun Huang chose a small meetup of deep learning experts at Stanford University to unveil the most powerful GPU of the moment, and gave the first Nvidia TITAN X graphics card to Andrew Ng (Wu Enda).

As early as 2012, Andrew Ng was applying GPUs to artificial intelligence. He used them to build one of the first large deep neural networks and trained it on 10 million images taken from YouTube videos. After being exposed to more than 20,000 different kinds of objects, the deep learning system began to recognize pictures of cats. Andrew Ng (Wu Enda) said in an interview: "Rather than having a bunch of researchers try to work out how to find the edges in an image, it is better to simply throw a large amount of data at the algorithm and let the software learn from the data itself." Since then, the use of GPUs in deep learning has kept growing, and the speed of deep learning systems has increased nearly 50-fold.

Andrew Ng and other researchers used GPUs for deep learning and revolutionized the entire industry, which is why Nvidia considers it so meaningful to give the first TITAN X graphics card to him.

At the meetup, Andrew Ng argued that just as electricity transformed every industry 100 years ago and continues to drive technological innovation, artificial intelligence will keep transforming industries over the next decade. The field, however, depends on the most advanced research equipment. Andrew Ng believes that with a machine that trains twice as fast, your research moves twice as fast. Does this mean that deep learning has now become a contest of hardware and funding?

Meanwhile, more than 500 scholars, researchers and students gathered at the Stanford Faculty Club. When Jen-Hsun Huang announced the latest generation of GPU, they grew very excited and immediately picked up their phones to take and share photos. Why?

Because GPUs now play a very important role in deep learning. Calculations that once required large numbers of CPUs or a supercomputer can now be completed with just a few GPUs. This greatly accelerates the development of deep learning and lays the groundwork for further progress in neural networks. Anyone familiar with deep learning knows that it requires training. Training means searching for the best values of thousands of variables. This is done through repeated attempts until the values converge, and the final values are not set by hand but produced by the fitting procedure itself. By learning at the pixel level in this way and continually extracting patterns, a computer can begin to approximate human-like reasoning. Today, almost all deep learning (machine learning) researchers use GPUs in their work.
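The idea of training as repeated attempts that converge on good values can be illustrated with a minimal sketch. The example below uses plain gradient descent to fit just two variables (a slope and an intercept) to synthetic data; real deep networks tune vastly more variables, usually on GPUs. The function name `train`, the learning rate, the step count and the data are all illustrative assumptions, not anything described in the article.

```python
import random


def train(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start from an arbitrary guess
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Take a small step against the gradient; repeated steps converge.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Synthetic data generated from y = 3x + 1 with a little noise (assumed values).
random.seed(0)
xs = [i / 10 for i in range(20)]
ys = [3.0 * x + 1.0 + random.gauss(0, 0.01) for x in xs]

w, b = train(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Nobody sets the final `w` and `b` by hand; they emerge from the data through many small corrections. The same loop applied to millions of variables is the workload that GPUs parallelize.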

Deep learning uses sophisticated multi-layer "deep" neural networks to build systems that can detect features in large amounts of unlabeled training data. Although machine learning has been around for decades, two more recent trends stand out:

Massive training data

Powerful and efficient parallel computing capabilities provided by GPU computing

These two trends have greatly encouraged the widespread use of machine learning. With GPUs, people can train deep neural networks on much larger training sets, in significantly less time, and with far less data center infrastructure. GPUs are also used to run the trained machine learning models for classification and prediction in the cloud, supporting far greater data volumes and throughput than before with less power and infrastructure.

Early users of GPU accelerators for machine learning included many web and social media companies, as well as leading research institutions in data science and machine learning. Compared with using CPUs alone, GPUs have thousands of compute cores and can deliver 10 to 100 times the application throughput, so the GPU has become the processor of choice for data scientists working with big data. One example of GPU-accelerated deep learning compared with the CPU comes from Professor Ian Lane of Carnegie Mellon University:

"With GPUs, pre-recorded speech or multimedia content can be transcribed much more quickly. Compared with a CPU implementation, recognition runs up to 33 times faster."

GPUs are indeed well suited to these areas, as shown by the fact that the big players in these industries, such as BAT (Baidu, Alibaba, Tencent), Google and Facebook, all use GPUs for training. Training deep neural networks takes an enormous amount of computation before a model converges mathematically. To truly approach adult-level intelligence, deep learning would need very large neural networks and far more data than speech recognition and image processing require. We hope that Andrew Ng can put this gift to use in further accelerating the development of deep learning.

At the same time, some people argue that although GPUs have made a tremendous contribution to artificial intelligence through deep learning, they have also led almost all scholars in the field to chase deep learning. It is true that more advanced GPUs and faster computation produce better experimental results than previous generations and make it easier to publish papers, but is this the best thing for the progress of the field as a whole? This view cannot help but make people think about which direction deep learning should take in the future.

PS: This article was compiled by Lei Feng Network (search for the "Lei Feng Network" public account); reproduction without permission is prohibited.

Via Nvidia Blog, Twitter